Request Your Free Guide Now:

"LLM Security Best Practices"

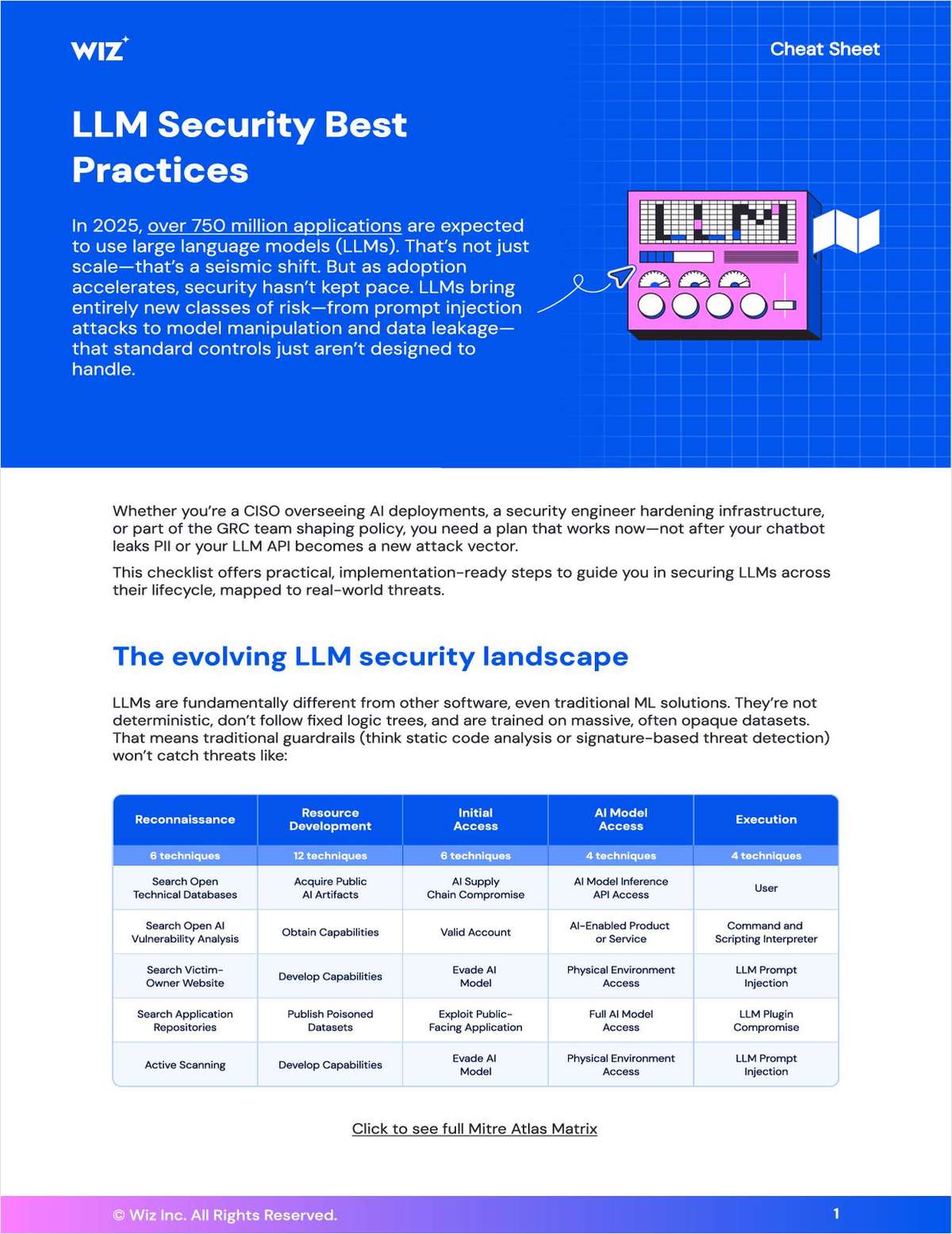

This guide outlines the emerging risks introduced by large language models (LLMs), which traditional security controls cannot adequately address due to LLMs’ non-deterministic behavior, opaque training data, and susceptibility to prompt injection, poisoning, and data leakage.

It provides a practical, threat-mapped checklist for securing LLMs across the entire lifecycle, covering data input/output protection, model integrity, infrastructure hardening, governance, monitoring, and user access control. As organizations rapidly adopt LLMs, the guide emphasizes implementing high-impact safeguards such as prompt filtering, training pipeline security, API hardening, and continuous monitoring. It concludes by highlighting how AI Security Posture Management (AI-SPM) can operationalize these best practices and defend against evolving AI-driven threats.

Offered Free by: Wiz
