
Request Your Free Research Report Now:

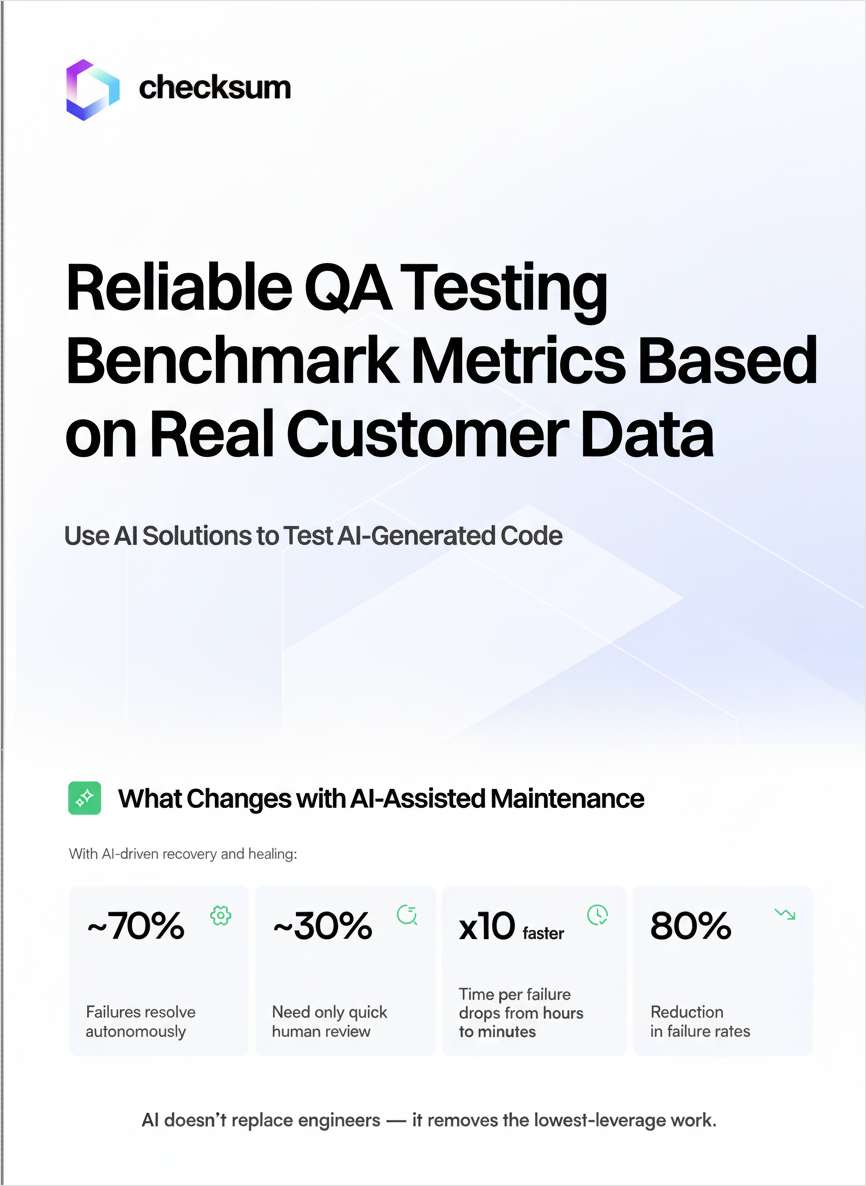

"The 2026 QA Testing Benchmark Report"

Real production metrics from 1M+ runs on what breaks, what it costs, and what AI can fix.

Web agent benchmarks often imply that AI is not ready for reliable end-to-end automation. Yet production teams already run meaningful workflows at scale, because real-world reliability comes from deterministic code, recovery logic, and ongoing maintenance.

In this report, Checksum analyzes 1M+ production automation runs to show what actually breaks and how often. The top failure drivers are selector changes (32%), flow changes (27%), environment instability (22%), and loading or timing issues (19%).
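To make "deterministic code plus recovery logic" concrete, here is a minimal Python sketch of one common recovery pattern: retrying a step that fails for a transient reason, such as a loading or timing issue, with exponential backoff. This is not Checksum's method. The names `run_with_recovery` and `flaky_load`, the parameters `attempts`, `base_delay` and `retry_on`, and the choice of `TimeoutError` as the "transient" signal are all hypothetical, chosen for illustration.

```python
import time
from typing import Callable, TypeVar

T = TypeVar("T")


def run_with_recovery(
    step: Callable[[], T],
    attempts: int = 3,
    base_delay: float = 0.5,
    retry_on: tuple = (TimeoutError,),  # assumption: these errors are treated as transient
) -> T:
    """Hypothetical helper: run a deterministic step, retrying transient failures."""
    if attempts < 1:
        raise ValueError("attempts must be at least 1")
    for attempt in range(1, attempts + 1):
        try:
            return step()
        except retry_on:
            if attempt == attempts:
                raise  # out of retries: surface the failure so a human (or AI) can repair it
            # Exponential backoff: base_delay, 2*base_delay, 4*base_delay, ...
            time.sleep(base_delay * 2 ** (attempt - 1))
    raise AssertionError("unreachable")


# Hypothetical flaky step: times out twice, then succeeds.
calls = {"n": 0}


def flaky_load() -> str:
    calls["n"] += 1
    if calls["n"] < 3:
        raise TimeoutError("page still loading")
    return "loaded"


result = run_with_recovery(flaky_load, base_delay=0.01)
print(result)  # loaded
```

Errors outside `retry_on` (for example, a selector that no longer exists) propagate immediately rather than being retried. Retrying cannot fix a selector or flow change; those need maintenance, which is the cost the report goes on to quantify.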

The report also examines the economics of test maintenance and the impact of AI-assisted repair. In the data, AI-maintained suites cut failure rates from 14.8 to 2.7 failures per 100 runs (an 82% reduction) and reduce the human time spent per failure to about five minutes on average.
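The 82% figure is the relative drop in the failure rate. The quick Python check below uses only the two rates quoted above. The helper name `relative_reduction` is illustrative.

```python
def relative_reduction(before: float, after: float) -> float:
    """Fractional drop from `before` to `after` (0.82 means 82% fewer)."""
    if before <= 0:
        raise ValueError("baseline rate must be positive")
    return (before - after) / before


# Failure rates per 100 runs, as quoted in the report summary.
before_per_100 = 14.8
after_per_100 = 2.7

reduction = relative_reduction(before_per_100, after_per_100)
print(f"{reduction:.1%}")  # 81.8%
```

The result, (14.8 - 2.7) / 14.8 ≈ 0.818, rounds to the 82% reduction stated in the summary.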

Offered Free by: Checksum.ai
